Data Scientist Intern at Smartail.AI

Smartail.AI is an AI-driven company specializing in data analysis and automation. As a Data Scientist Intern, I processed and analyzed masked data extracted from handwritten student documents. I used Postman to automate the API requests that moved the processed data into Docker containers, where it could be deployed and accessed by the rest of the system.

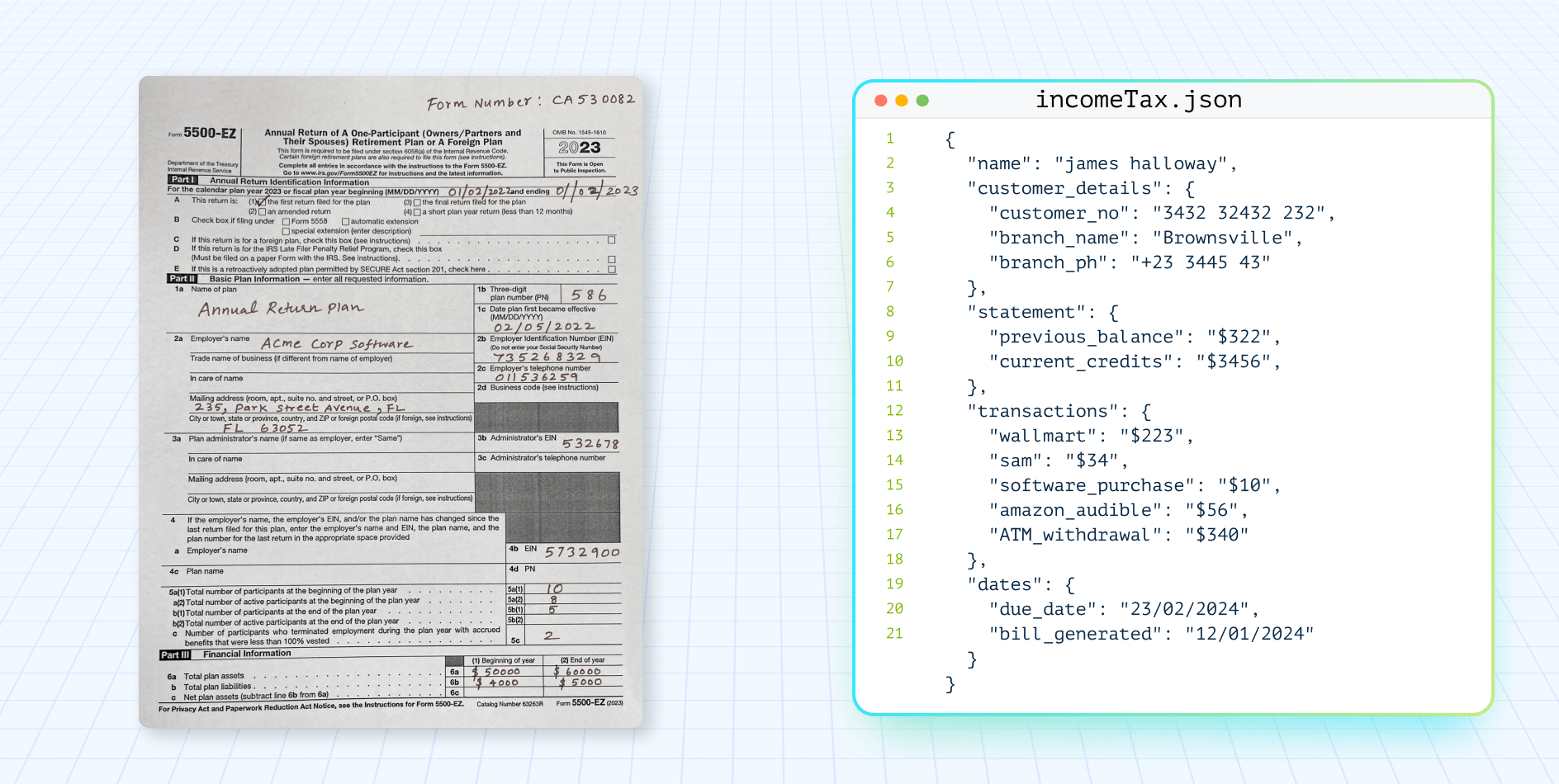

- Masked data processing: Preprocessed handwritten documents with OCR-based techniques to extract structured data while keeping it anonymized.

- API automation with Postman: Designed and automated API requests for the data pipeline workflows, reducing manual intervention.

- Docker integration: Deployed processed data into Dockerized environments so that it interacted smoothly with backend systems.

- Data workflow optimization: Improved the efficiency of data ingestion, validation, and transmission, reducing system overhead.
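
The anonymization step described in the first bullet can be illustrated with a minimal sketch. It is not the pipeline used at Smartail.AI: the helper names `mask_ids` and `mask_record`, the field names, and the rule that any run of five or more digits counts as an ID are all assumptions made for illustration.

```python
import re

# Assumption: any standalone run of 5 or more digits is treated as an ID.
ID_PATTERN = re.compile(r"\b\d{5,}\b")


def mask_ids(text, keep_last=2):
    """Replace ID-like numbers with asterisks, keeping the last few digits."""

    def _mask(match):
        value = match.group(0)
        keep = max(0, min(keep_last, len(value)))
        # Slice from an explicit index so keep == 0 masks every digit.
        return "*" * (len(value) - keep) + value[len(value) - keep:]

    return ID_PATTERN.sub(_mask, text)


def mask_record(record, sensitive_fields):
    """Return a copy of an extracted record with sensitive values masked."""
    masked = {}
    for key, value in record.items():
        if key in sensitive_fields:
            # Hypothetical policy: hide the whole value of a sensitive field.
            masked[key] = "*" * len(str(value))
        elif isinstance(value, str):
            # Free text is kept, but ID-like numbers inside it are masked.
            masked[key] = mask_ids(value)
        else:
            masked[key] = value
    return masked
```

For a made-up record such as `{"student_name": "Asha Rao", "answer_text": "Roll no 1234567 scored 42"}`, calling `mask_record(record, {"student_name"})` would hide the name entirely and turn the roll number into `*****67`, while the short number `42` is left alone.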

Code Example: Automating a Data Upload API Request with Python Requests

    import requests

    # API endpoint for data upload
    api_url = "https://api.smartail.ai/data/upload"

    # Sample data payload
    data_payload = {
        "student_id": "12345",
        "document_type": "handwritten",
        "masked_data": "*****",
        "processing_status": "Completed",
    }

    # Request headers (replace the placeholder with a real access token)
    headers = {
        "Content-Type": "application/json",
        "Authorization": "Bearer YOUR_ACCESS_TOKEN",
    }

    # Send the POST request, the same call a Postman request would make.
    # json= serializes the payload; timeout avoids waiting forever on a stalled server.
    response = requests.post(api_url, headers=headers, json=data_payload, timeout=10)

    # Report the outcome
    if response.status_code == 200:
        print("Data Successfully Uploaded:", response.json())
    else:
        print("Error in Uploading Data:", response.status_code, response.text)
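
Before a payload like the one above is sent, it can be checked locally so that malformed records never reach the API. The following is a minimal sketch of such a validation step, not the checks actually used in the project: the function name `validate_payload` is hypothetical, the required fields are taken from the sample payload, and every status value other than `"Completed"` is an assumption.

```python
# Field names taken from the sample payload above.
REQUIRED_FIELDS = ("student_id", "document_type", "masked_data", "processing_status")

# Assumption: only "Completed" appears in the original; the others are illustrative.
ALLOWED_STATUSES = {"Pending", "Completed", "Failed"}


def validate_payload(payload):
    """Return a list of problems; an empty list means the payload looks valid."""
    problems = []
    for field in REQUIRED_FIELDS:
        value = payload.get(field)
        # Every required field must be a non-empty string.
        if not isinstance(value, str) or not value.strip():
            problems.append(f"missing or empty field: {field}")
    status = payload.get("processing_status")
    # Only check the status value if the field itself is present and non-empty.
    if isinstance(status, str) and status.strip() and status not in ALLOWED_STATUSES:
        problems.append(f"unexpected processing_status: {status}")
    return problems
```

A caller would typically skip the upload and log the returned messages whenever the list is not empty, which keeps validation separate from transmission.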

- Implemented masked data handling: Applied anonymization techniques so that sensitive information stayed protected.

- Automated API testing with Postman: Developed Postman scripts to streamline data transmission and validation.

- Integrated with a Dockerized system: Ensured smooth deployment by integrating processed datasets into containerized environments.

- Optimized scalability and automation: Streamlined data pipelines for batch processing and real-time updates.
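
The batch-processing idea in the last bullet can be sketched as a small generator that groups records into fixed-size batches before they are sent onward. This is an illustrative assumption about how batching might be done, not the project's actual implementation; the name `batched_records` and the record shape are hypothetical.

```python
from itertools import islice


def batched_records(records, batch_size):
    """Yield consecutive lists of at most batch_size records."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    iterator = iter(records)
    while True:
        # islice pulls the next batch_size items without loading everything.
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


if __name__ == "__main__":
    # Made-up records standing in for processed documents.
    sample = [{"student_id": str(i)} for i in range(5)]
    for batch in batched_records(sample, 2):
        print(len(batch), "records in this batch")
```

Because the function works on any iterable, the same loop can serve a one-off batch job or a long-running stream of incoming records.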

Things learnt during this internship:

- Data Extraction & Masking from handwritten documents

- API automation & integration using Postman & Python Requests

- Efficient Data Processing Pipelines for Dockerized environments

- Hands-on experience with large-scale data workflows

- Collaboration with AI-driven data teams to optimize processing efficiency

This internship gave me hands-on experience with real data workflows and prepared me to work in high-tech research environments.