Project Intern at ISRO-URSC

The Indian Space Research Organisation (ISRO) is India's government-run space agency, known for missions such as Chandrayaan and Mangalyaan and for launch vehicles such as the PSLV. I worked there as a project intern for a month, where my role involved converting 2D Flash LiDAR data into 3D point clouds using the VoteNet algorithm.

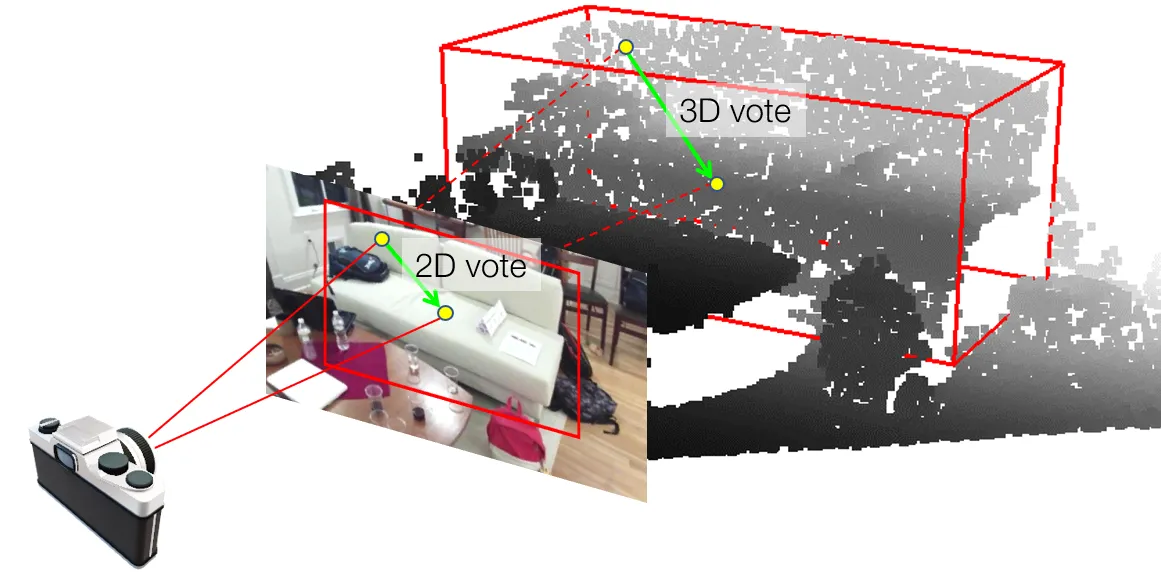

The term ImVoteNet is a blend of the words "Image" and "VoteNet." Here, "Image" refers to a simple RGB image, while "VoteNet" refers to the point-cloud-based 3D object detector it builds on.

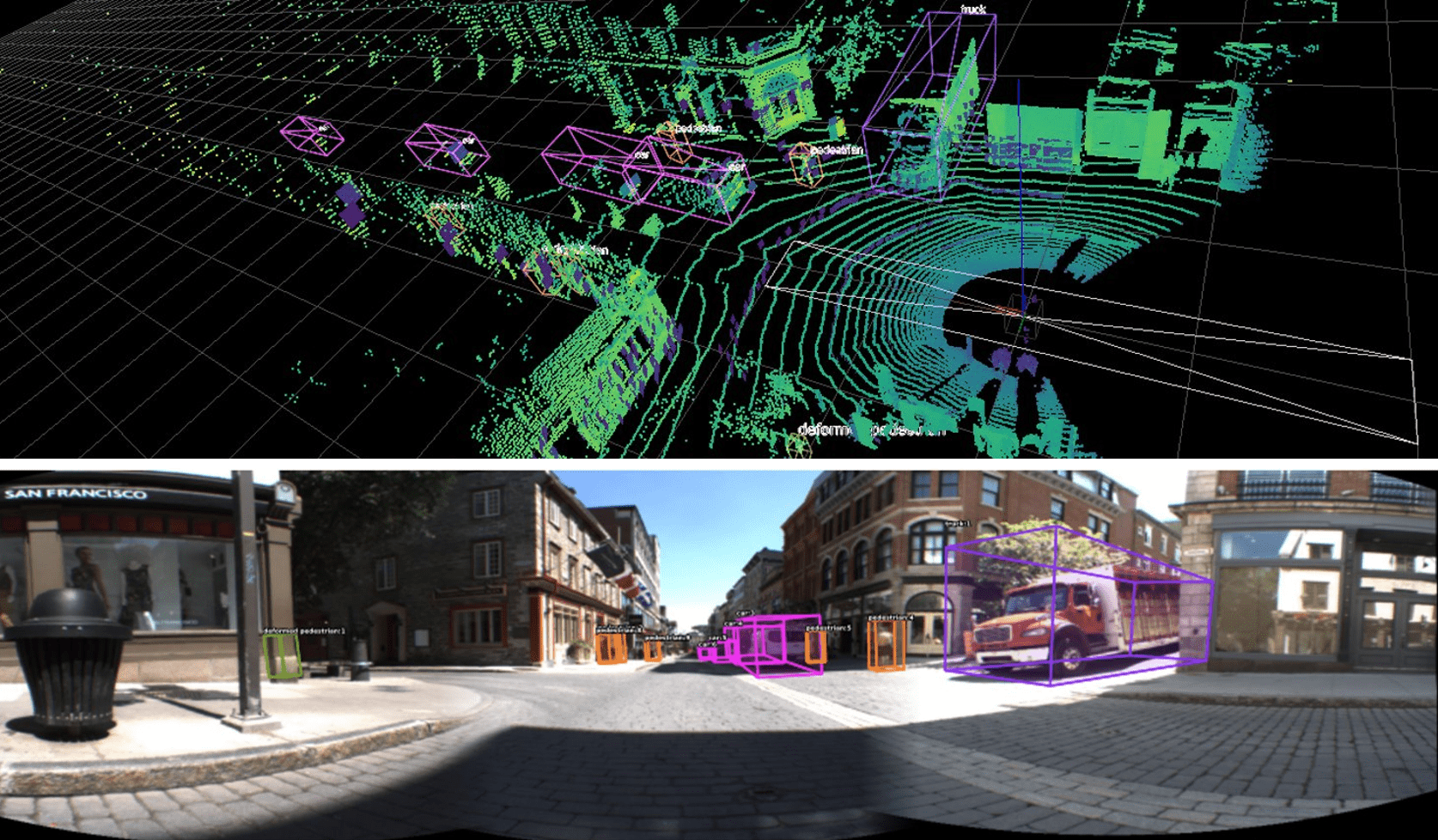

- Worked with Flash LiDAR data and the Leddar PixSet dataset.
- Used the VoteNet algorithm.
- Converted 2D images to 3D point cloud data.

Code Example for pre-loading 2D bounding boxes

You can get more info at https://github.com/facebookresearch/votenet.

```python
import numpy as np

# type2class maps class-label strings to integer class ids.
# It is not defined in this excerpt and is assumed to exist at module level.


def pre_load_2d_bboxes(bbox_2d_path):
    """
    Filter out all detections in the .txt file with objectness_score < 0.1.

    For the remaining detections (objectness_score >= 0.1), three parallel
    lists are built: the class id for each class label (cls_id_list), the
    objectness score (cls_score_list) and the 2D bounding box values
    (bbox_2d_list).

    :param bbox_2d_path: Path to the .txt file discussed earlier which contains
        the 2D bounding box info.
    :return: cls_id_list, cls_score_list, bbox_2d_list
    """
    print("pre-loading 2d boxes from: " + bbox_2d_path)
    # Read 2D object detection boxes and scores
    cls_id_list = []
    cls_score_list = []
    bbox_2d_list = []
    with open(bbox_2d_path, 'r') as f:
        for line in f:
            det_info = line.rstrip().split(" ")
            prob = float(det_info[-1])
            # Filter out low-confidence 2D detections
            if prob < 0.1:
                continue
            cls_id_list.append(type2class[det_info[0]])
            cls_score_list.append(prob)
            # Fields 4..7 hold xmin, ymin, xmax, ymax; truncate to integer pixels
            bbox_2d_list.append(np.array([float(det_info[i]) for i in range(4, 8)]).astype(np.int32))
    return cls_id_list, cls_score_list, bbox_2d_list
```
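To make the expected input concrete, here is a minimal sketch of how a single detection line is parsed. The line layout (class label in the first field, box corners in fields 4 to 7, score in the last field) is inferred from the indexing in the function above. The sample line, the `type2class` mapping and the helper name `parse_detection_line` are illustrative assumptions, not taken from the dataset.

```python
import numpy as np

# Hypothetical label-to-id mapping; the real one depends on the dataset classes.
type2class = {"car": 0, "pedestrian": 1}


def parse_detection_line(line, min_score=0.1):
    """Parse one detection line; return None if the score is below min_score."""
    fields = line.rstrip().split(" ")
    score = float(fields[-1])
    if score < min_score:
        return None
    # Same conversion as in pre_load_2d_bboxes: floats truncated to int32 pixels
    box = np.array([float(fields[i]) for i in range(4, 8)]).astype(np.int32)
    return type2class[fields[0]], score, box


# Illustrative line: label, three unused fields, xmin ymin xmax ymax, score
sample = "car 0 0 0 100.7 50.2 220.9 140.0 0.87"
cls_id, score, box = parse_detection_line(sample)
print(cls_id, score, box)
```

Lines whose score falls below the threshold return `None`, which mirrors the `continue` branch in the loop.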

Geometric Cues: Lifting image votes to 3D

The 2D object center in the image plane can be represented as a ray in 3D space connecting the 3D object center and the camera optical center with the help of the intrinsic camera matrix.

Let,

P = (x1, y1, z1) — point on the surface of the object in the point cloud

C = (x2, y2, z2) — center point of the 3D object

p = (u1, v1) — projection of point P on the 2D image

c = (u2, v2) — projection of point C on the 2D image

We can observe that 2D voting reduces the search space for the center of the 3D object to a line (the ray OC, where O is the camera optical center), along which only the depth z is unknown.
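The geometry can be sketched with a pinhole camera model. The snippet below is a minimal illustration rather than the project's code: the intrinsic matrix `K` uses made-up focal length and principal point values, and `project`, `pixel_to_ray` and `pseudo_3d_vote` are hypothetical helper names. It back-projects the 2D center c into a ray through the optical center and, under the assumption that C lies at roughly the same depth as P, turns the 2D vote c - p into a 3D offset.

```python
import numpy as np

# Assumed pinhole intrinsics (illustrative values): focal length f, principal point (cx, cy)
f, cx, cy = 500.0, 320.0, 240.0
K = np.array([[f, 0.0, cx],
              [0.0, f, cy],
              [0.0, 0.0, 1.0]])


def project(point_3d, K):
    """Project a 3D point in camera coordinates to pixel coordinates (u, v)."""
    uvw = K @ point_3d
    return uvw[:2] / uvw[2]


def pixel_to_ray(uv, K):
    """Direction of the ray from the optical center through pixel (u, v), scaled so z = 1."""
    return np.linalg.inv(K) @ np.array([uv[0], uv[1], 1.0])


def pseudo_3d_vote(p_uv, c_uv, depth, f):
    """Lift the 2D vote c - p to 3D, assuming C is at the same depth as P."""
    du, dv = c_uv - p_uv
    return np.array([du * depth / f, dv * depth / f, 0.0])


P = np.array([0.5, 0.2, 4.0])  # surface point
C = np.array([0.8, 0.1, 4.0])  # object center (same depth here for simplicity)
p, c = project(P, K), project(C, K)

ray = pixel_to_ray(c, K)
# Every point z * ray projects to the same pixel c; only the depth z is free.
for z in (1.0, 4.0, 10.0):
    assert np.allclose(project(z * ray, K), c)

vote = pseudo_3d_vote(p, c, P[2], f)
print(P + vote)  # matches C when the equal-depth assumption holds
```

When the true center sits at a different depth than P, the lifted vote is only an approximation, which is why the remaining uncertainty lies along the ray.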

Code Example for Geometric Cues: Lifting image votes to 3D

You can get more info at 3D Object Detection in Point Clouds with Image Votes.

```python
import numpy as np

MAX_NUM_PIXEL = 530 * 730  # maximum number of pixels per image
max_imvote_per_pixel = 3  # pixels inside multiple boxes get multiple votes, up to 3
# Per pixel: 1 vote counter + up to 3 votes of (du, dv, class id, box index)
vote_dims = 1 + max_imvote_per_pixel * 4


def get_full_img_votes_1d(full_img, cls_id_list, bbox_2d_list):
    """
    Loop over every detection, treating it as a separate image (obj_img).

    The image vote vectors (center coordinates - pixel coordinates) and some
    metadata for every detection are stored in a list (obj_img_list). This list
    is then looped over to write the votes into a full-image array.

    :param full_img: 3-channel RGB image
    :param cls_id_list, bbox_2d_list: return values of pre_load_2d_bboxes
    :return: geometric cues, flattened to shape (1, MAX_NUM_PIXEL * vote_dims)
    """
    obj_img_list = []
    for i2d, (cls2d, box2d) in enumerate(zip(cls_id_list, bbox_2d_list)):
        xmin, ymin, xmax, ymax = box2d
        obj_img = full_img[ymin:ymax, xmin:xmax, :]
        obj_h = obj_img.shape[0]
        obj_w = obj_img.shape[1]
        # Box origin (xmin, ymin), box size (height, width), class id, index to the semantic cues
        meta_data = (xmin, ymin, obj_h, obj_w, cls2d, i2d)
        if obj_h == 0 or obj_w == 0:
            continue

        # Use 2D box center as approximation
        uv_centroid = np.array([int(obj_w / 2), int(obj_h / 2)])
        uv_centroid = np.expand_dims(uv_centroid, 0)

        v_coords, u_coords = np.meshgrid(range(obj_h), range(obj_w), indexing='ij')
        img_vote = np.transpose(np.array([u_coords, v_coords]), (1, 2, 0))
        # Vote = box center - pixel position, shape (obj_h, obj_w, 2)
        img_vote = np.expand_dims(uv_centroid, 0) - img_vote

        obj_img_list.append((meta_data, img_vote))

    full_img_height = full_img.shape[0]
    full_img_width = full_img.shape[1]
    full_img_votes = np.zeros((full_img_height, full_img_width, vote_dims), dtype=np.float32)

    # Empty votes: channels 3, 7 and 11 (the class-id slots) are initialised to -1
    full_img_votes[:, :, 3::4] = -1.

    for obj_img_data in obj_img_list:
        meta_data, img_vote = obj_img_data
        u0, v0, h, w, cls2d, i2d = meta_data
        for u in range(u0, u0 + w):
            for v in range(v0, v0 + h):
                # Channel 0 counts how many boxes have already covered this pixel
                iidx = int(full_img_votes[v, u, 0])
                if iidx >= max_imvote_per_pixel:
                    continue
                full_img_votes[v, u, (1 + iidx * 4):(1 + iidx * 4 + 2)] = img_vote[v - v0, u - u0, :]
                full_img_votes[v, u, (1 + iidx * 4 + 2)] = cls2d
                # add +1 here as we need a dummy feature for pixels outside all boxes
                full_img_votes[v, u, (1 + iidx * 4 + 3)] = i2d + 1
        full_img_votes[v0:(v0 + h), u0:(u0 + w), 0] += 1

    # Pad to a fixed length so that every image yields a vector of the same size
    full_img_votes_1d = np.zeros((MAX_NUM_PIXEL * vote_dims), dtype=np.float32)
    full_img_votes_1d[0:full_img_height * full_img_width * vote_dims] = full_img_votes.flatten()
    full_img_votes_1d = np.expand_dims(full_img_votes_1d.astype(np.float32), 0)

    return full_img_votes_1d
```
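For reference, each pixel ends up with a vector of `vote_dims = 13` values: a vote counter followed by up to three groups of (du, dv, class id, box index + 1). The following sketch shows one way to read such a vector back. `decode_pixel_votes` is a hypothetical helper written for illustration, and the sample vector is made up.

```python
import numpy as np

max_imvote_per_pixel = 3
vote_dims = 1 + max_imvote_per_pixel * 4


def decode_pixel_votes(vec):
    """Split one per-pixel vote vector into (du, dv, class_id, box_index) tuples."""
    # The counter keeps growing past the cap, so clamp it to the stored slots
    count = min(int(vec[0]), max_imvote_per_pixel)
    votes = []
    for k in range(count):
        du, dv, cls_id, box_plus_one = vec[1 + 4 * k: 5 + 4 * k]
        # The box index was stored with a +1 offset, so undo it here
        votes.append((float(du), float(dv), int(cls_id), int(box_plus_one) - 1))
    return votes


# Made-up pixel covered by one box: vote (12, -5), class 2, box index 0
vec = np.zeros(vote_dims, dtype=np.float32)
vec[0] = 1
vec[1:5] = [12.0, -5.0, 2.0, 1.0]
print(decode_pixel_votes(vec))
```

A pixel outside every box has a counter of 0 and therefore decodes to an empty list.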

Things learnt during this internship:

- Object detection and identification
- Converting 2D images to 3D point clouds
- Training and analyzing data
- Working with highly qualified scientists
- Witnessing the Chandrayaan-3 launch

This opportunity provided me with hands-on experience and trained me to work in high-tech research facilities.